Designing Conversations for AI

Building AI-powered chatbots with no code, prompt engineering, fine-tuning AI models, and designing conversation flowcharts.

Chatbots have become increasingly popular, thanks to their potential to simulate human-like conversations. However, the quality of the conversations a chatbot can hold, and how well those conversations help users complete their tasks, is super important in defining the experience users have with the interface or product.

In designing UX and content, I’m constantly thinking about words; how they are written and used, their usefulness and effectiveness, if they are understood, how they make users feel, how they can be used to help users accomplish tasks and build trust in whatever product I’m working with. This is probably why I’m in the habit of trying to break every chatbot and voice assistant I come across (sorry Alexa and Siri), and why I believe conversation design has a major role to play in crafting the UX of AI interactions.

Conversation design involves crafting the interaction between an interface and a user in such a way that, instead of issuing commands to the computer, the user can have natural-sounding conversations with it. I mean, if I’m going to be forced to chat with a computer that is obviously 1000x smarter than me, it should at the very least make an effort to have some personality or give me the information I need. For instance, if I’m using a chatbot from a healthcare company, I’d like it to sound friendly, knowledgeable, and caring.

In fact, some benefits of conversation design in the UX of chatbots are:

Memorable experiences: Good quality conversations between chatbots and users help to create memorable experiences for the users. Users are more likely to remember a chatbot that had a personality and was engaging.

Higher engagement: Chatbots with personality are more engaging and can keep users interested for longer periods, leading to higher engagement rates and more conversions.

Improved user satisfaction: Chatbots that are easy to use and understand can lead to improved user satisfaction. By designing UX and content with the user in mind, you can create a chatbot that meets their needs and exceeds their expectations.

Reduced workload: Chatbots can help to reduce the workload of human agents by handling simple and repetitive tasks. By designing a chatbot that can handle these tasks efficiently, you can free up human agents to focus on more complex issues.

With one thought-piece about AI for every 3 posts on my feed, it was finally time to try my hand at building an AI-powered bot…with a personality.

However, building an AI chatbot can be a daunting task, especially for people who don’t have coding experience or who fight intrusive thoughts to set their computers on fire when they even think about coding (like me). Fortunately, no-code chatbot builders have made it possible for anyone to create a chatbot with ease, and this made the whole experience a little less intimidating.

I explored 3 tools for this case study:

ChatGPT: For practicing prompt creation, exploring prompt engineering, and training raw AI models.

Riku.ai: For building a web chatbot using prompt engineering and integrating datasets to fine-tune models.

Landbot.io: For building an automated WhatsApp chatbot using stories and conversation flowcharts.

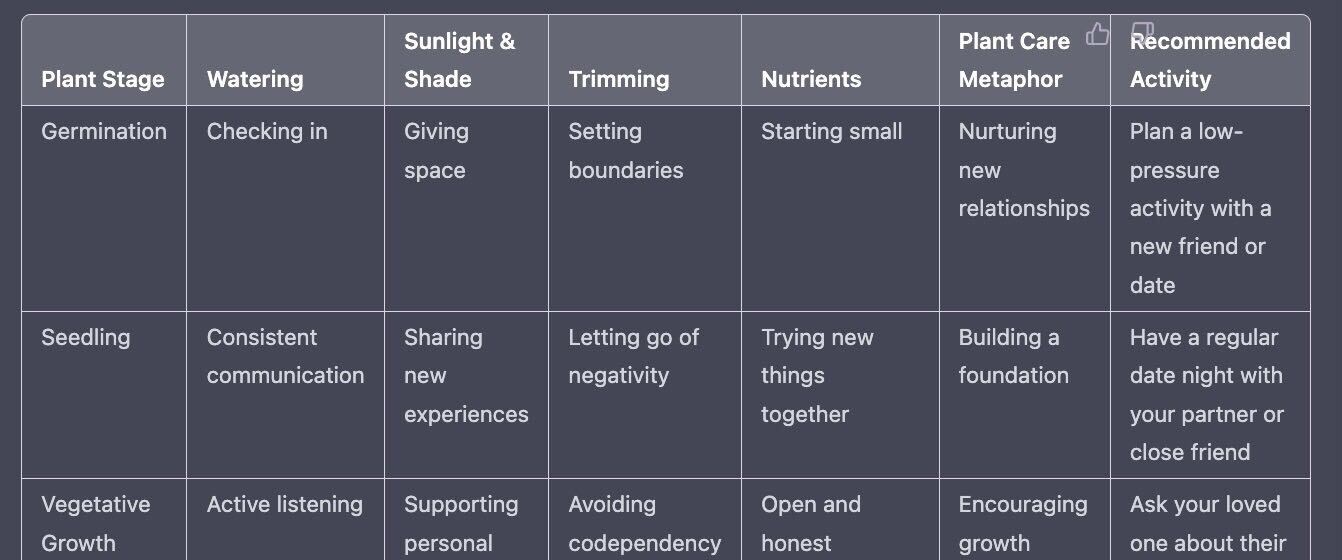

There are several steps involved in designing conversations for your product right after deciding whether or not conversation is the right fit for your feature. The feature I’m currently working on involves recommending activities to users to nurture their relationships in a bid to grow virtual plants.

To get started with building a chatbot, there are a few things to consider:

Gathering requirements

Understanding prompt engineering

Learning how to customize base AI models through few-shot learning or fine-tuning

Designing your conversations

The process

Gathering requirements:

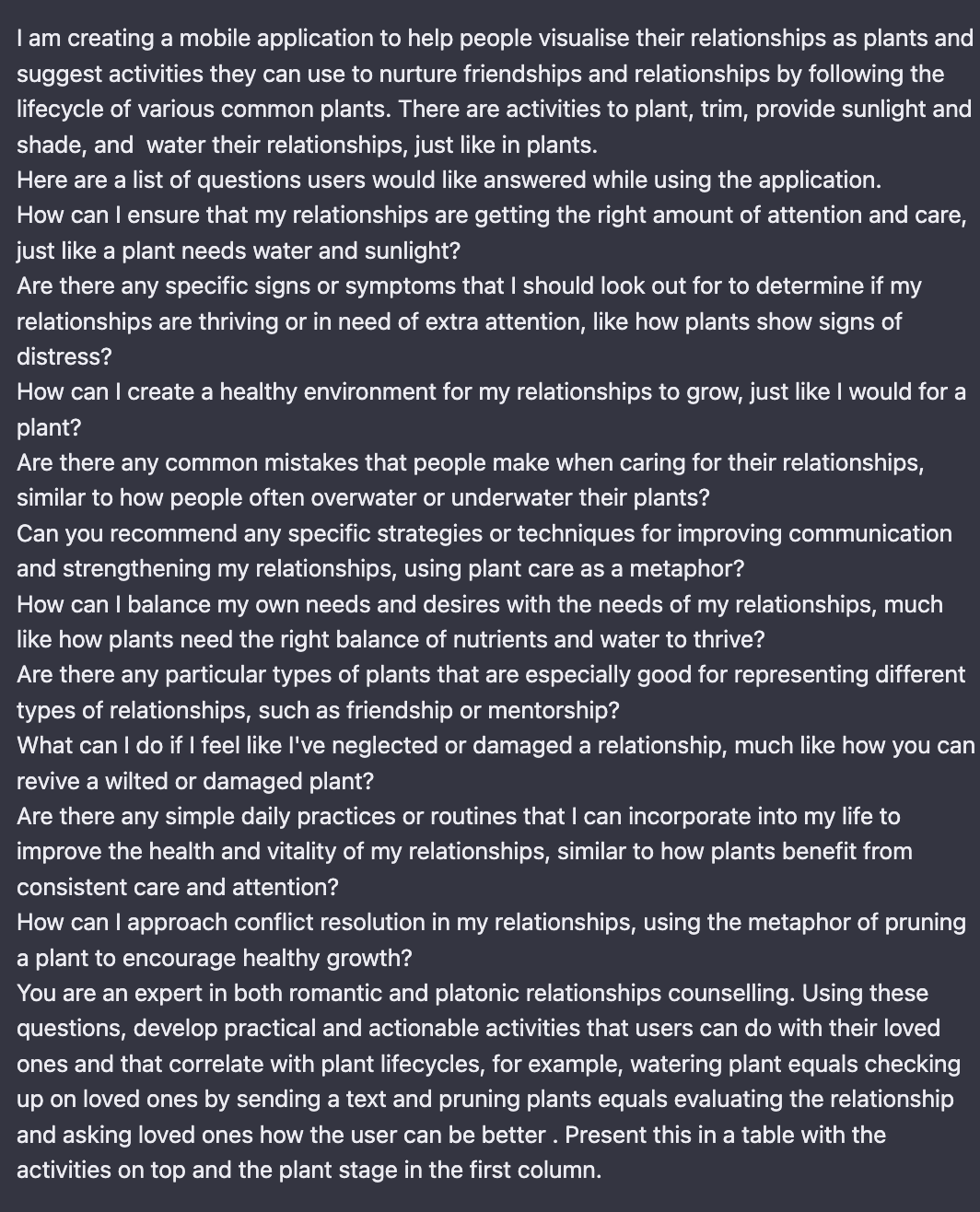

This means understanding your users and asking questions like: who are the users, what are their needs, what tasks would they complete using a chatbot, how do they currently complete these tasks, what words do they use to talk about completing these tasks, and what situations would trigger this?

Prompt Engineering:

Prompting is essentially telling AI what you want it to do. In proper prompt engineering, the instructions given to the AI are specific to your niche, include relevant examples, and lead to outputs tailored to your topic.

Prompts are at the center of generative AI models, and to build a good one, you want to understand what exactly goes into prompting an AI model to get the desired outputs.

When building prompts, the instructions should be very specific and include the following:

Context: I’m building a…., you are an empathetic, expert botanist…

The problem you want to solve: …to describe the relationship between seasonal weather changes and plant health…

Knowledge and examples needed to complete the task: …for example using how dry weather can cause mottling…

The type of output you want by specifying format, tone and style: …present the results in a table…
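To make those four ingredients concrete, here is a minimal Python sketch that stitches them into a single prompt string. The function name `build_prompt` and its argument names are hypothetical, chosen only for illustration; the wording of each piece is borrowed from the fragments above.

```python
def build_prompt(context, problem, examples, output_format):
    """Join the four prompt ingredients into one instruction string."""
    parts = [
        context.strip(),                                         # who the AI is / what you are building
        problem.strip(),                                         # the problem you want solved
        "Examples: " + "; ".join(e.strip() for e in examples),   # knowledge and examples
        "Output format: " + output_format.strip(),               # format, tone and style
    ]
    return "\n".join(parts)


prompt = build_prompt(
    context="You are an empathetic, expert botanist.",
    problem="Describe the relationship between seasonal weather changes and plant health.",
    examples=["how dry weather can cause mottling"],
    output_format="Present the results in a table.",
)
print(prompt)
```

The resulting text can be pasted into any chat interface; keeping the pieces separate just makes it easier to swap one out and see how the response changes.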

Below, I used OpenAI’s GPT-3.5 turbo model (ChatGPT) to test prompt creation, and with more detailed instructions, got super specific responses relevant to my query.

Customizing raw/base AI models

OpenAI has several base or raw models designed to work with specific inputs and generate different outputs. For instance, there’s DALL-E which converts text to image, Whisper converting audio to text, and Codex converting text to code.

Then, there are the GPT-3 base models, optimized to understand and generate text or natural language with varying capabilities and speed. ChatGPT is built on the even more capable GPT-3.5 turbo (an improved version of GPT-3 optimized for chat).

To dive deeper and build your own model for your app or business, these base models need to be trained to give customized outputs tailored to your specific needs and audience.

2 ways to customize base AI models are few-shot learning (giving prompts with a few examples) and fine-tuning (preparing and uploading training data as datasets into the model).

Few-shot Learning

You can use either OpenAI or Riku.ai to train raw GPT-3 models via the few-shot learning method. Here, you start with very clear instructions, e.g. Give very detailed and in-depth relationship advice…

Think about the likely inputs your end user would give the AI to work with, maybe a question about relationships or a request for relationship tips, and label that input followed by a colon to help the AI learn the pattern, e.g. Relationship question:

Follow up with an example of this input and then on the next line use a differentiator (###) to close it.

On the next line you then have an example of your output followed again by a differentiator (###) below it. Essentially, the pattern you want is input, separator, output, separator.

You can feed it as many examples as your token allowance (character limit) permits; the more, the better. Then hit the generate button and save your model when you get a prompt you are happy with.

This way, the model learns to give outputs patterned after the examples in your prompt. Using the example above, I was able to train the GPT-3 curie model on Riku and create an interface for it, so when a query is entered, it generates outputs based on the examples I fed it in the training prompt.
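The input, separator, output, separator pattern described above can be sketched in a few lines of Python. This is only an illustration under assumptions: `build_few_shot_prompt` is a hypothetical helper, and the two question-and-answer pairs are made up, not the examples used for the bot in this article.

```python
SEPARATOR = "###"  # the differentiator placed after every input and output


def build_few_shot_prompt(instructions, label, examples, new_input):
    """Lay out instructions, then input / ### / output / ### for each example."""
    lines = [instructions, ""]
    for question, answer in examples:
        lines += [f"{label}: {question}", SEPARATOR, answer, SEPARATOR]
    # Finish with the user's real input so the model continues the pattern
    lines += [f"{label}: {new_input}", SEPARATOR]
    return "\n".join(lines)


# Made-up examples, for illustration only
examples = [
    ("How do I reconnect with an old friend?",
     "Send a short, warm message that mentions a memory you share."),
    ("How can I show my sister I appreciate her?",
     "Plan a small activity around something she loves and tell her why."),
]

few_shot_prompt = build_few_shot_prompt(
    instructions="Give very detailed and in-depth relationship advice.",
    label="Relationship question",
    examples=examples,
    new_input="How do I apologise after an argument?",
)
print(few_shot_prompt)
```

Because the prompt ends on a separator right after the new question, the model's most likely continuation is an answer in the same style as the examples.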

Fine-tuning

The difference between fine-tuning and few-shot learning is the absence of detailed instructions or multiple examples within the same prompt. Instead of having to write examples within prompts, fine-tuning allows you to train models with large datasets, so you can use more examples than would fit in a prompt.

Usually, the data is prepared as a JSONL file where each line is one example of an input-output pair (a prompt-completion pair), with the input and output separated by ### and the output starting on a new line.

Using another example with 2 inputs, we’d have something like this, which is converted to a JSONL file on Riku.ai.
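For readers who want to see the file format itself, here is a minimal Python sketch that turns two input-output pairs into JSONL text. It assumes the `prompt` and `completion` keys used by OpenAI's original GPT-3 fine-tuning format and a `###` separator at the end of each prompt; the two pairs are invented for illustration, and what Riku.ai generates behind the scenes may differ in detail.

```python
import json

SEPARATOR = "\n###\n"  # assumed separator marking where the input ends

# Invented prompt-completion pairs, for illustration only
pairs = [
    ("Relationship question: How do I check in on a busy friend?",
     "Send a low-pressure message and suggest a specific time to catch up."),
    ("Relationship question: What can I do for my mum's birthday?",
     "Cook her favourite meal and write a note about a memory you share."),
]


def to_jsonl(pairs):
    """Return JSONL text: one JSON object per line, one line per example."""
    lines = []
    for prompt, completion in pairs:
        record = {"prompt": prompt + SEPARATOR, "completion": completion}
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"


jsonl_text = to_jsonl(pairs)
print(jsonl_text)
```

Saving `jsonl_text` to a file ending in `.jsonl` gives you a dataset with one training example per line.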

It’s usually recommended to add at least 50 examples to your training data, but also keep in mind that different models have token limits for each prompt and completion pairing. One token is roughly equal to 4 characters of English text, so if, for instance, you are fine-tuning OpenAI’s Davinci base model with a token limit of 4,000, you only have about 16,000 characters to work with per prompt-completion pairing; go over that and you get an error.
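The character arithmetic above is easy to check before uploading anything. The sketch below uses the 4-characters-per-token rule of thumb, which is only an approximation (real tokenizers count differently), and the helper names `estimate_tokens` and `fits_limit` are hypothetical.

```python
CHARS_PER_TOKEN = 4  # rule of thumb for English text, not an exact count


def estimate_tokens(text):
    """Rough token estimate: character count divided by 4, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_limit(prompt, completion, token_limit=4000):
    """True if the pair's estimated tokens stay within the model's limit."""
    return estimate_tokens(prompt) + estimate_tokens(completion) <= token_limit


max_chars = 4000 * CHARS_PER_TOKEN
print(max_chars)  # 16000 characters for a 4,000-token limit
print(fits_limit("Relationship question: How do I say sorry?", "Start by listening."))
```

Running a check like this over every pair in your dataset flags the examples that are likely to be rejected for length.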

After defining my prompt inputs and fine-tuning the base model, here’s how my customized AI bot looked.

In your now fine-tuned model, you can tweak parameters like temperature, which determines how varied and less repetitive each output is; frequency penalty, which reduces the likelihood of your model repeating lines verbatim; and presence penalty, which increases the likelihood of your model talking about new topics. You can also adjust the maximum number of tokens your model can generate.

Both OpenAI and Riku allow you to integrate your new prompt settings into your app or website using your API keys.
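As a rough sketch of how these settings might be bundled up in code, the snippet below collects them into a dictionary and checks them against the ranges OpenAI's API accepts (temperature from 0 to 2, both penalties from -2 to 2). The key names follow OpenAI's parameter names; Riku's interface may label them differently, and `build_settings` and its defaults are hypothetical.

```python
def build_settings(temperature=0.7, frequency_penalty=0.0,
                   presence_penalty=0.0, max_tokens=256):
    """Validate generation settings and return them as a dictionary."""
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    for name, value in (("frequency_penalty", frequency_penalty),
                        ("presence_penalty", presence_penalty)):
        if not -2.0 <= value <= 2.0:
            raise ValueError(f"{name} must be between -2 and 2")
    if max_tokens < 1:
        raise ValueError("max_tokens must be at least 1")
    return {
        "temperature": temperature,              # higher = more varied output
        "frequency_penalty": frequency_penalty,  # discourages verbatim repeats
        "presence_penalty": presence_penalty,    # nudges towards new topics
        "max_tokens": max_tokens,                # cap on generated length
    }


settings = build_settings(temperature=0.9, frequency_penalty=0.5,
                          presence_penalty=0.6, max_tokens=200)
print(settings)
```

A friendly, chatty bot usually benefits from a somewhat higher temperature, while a bot that must give consistent answers is better served by a lower one.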

Designing conversations

To finally design conversations for your chatbot, you need to go back to the requirements you had initially gathered, thinking about the tasks users would like to accomplish and the kinds of conversations they would have with the chatbot considering the context of the product.

Here, I asked questions like: What kind of personality would a bot designed to assist me in maintaining my relationships have? What voice would it have? How would its tone change as context varied? How well would it anticipate the needs of the users? What kind of language would users expect it to use, considering we were targeting a niche audience of plant enthusiasts? What would be the trigger for users to engage with the bot?

This step also involves user research and conversation mining (finding the spaces where your target audience expresses itself freely and observing the kind of words and language people use when they talk about your product, service or the tasks they’d like to do). Ideally, you want your chatbot to use the same language as your users, because it’d be weird to use “prescription” to describe an ingredient list on a food/recipe app when your users say “recipe”.

After getting a high-level overview of the kind of dialogues we wanted and sketching them on paper, which took a lot of thinking about different scenarios, use cases, and edge cases, I used Landbot.io to create a high-fidelity conversation flowchart.
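If it helps to think of a conversation flowchart as data, here is a small Python sketch in which each box is a node with a message and each arrow is a user reply leading to the next node. This is not Landbot's format (Landbot is a visual, no-code builder); the node names, messages, and helper functions are all hypothetical and only illustrate the idea, including the edge case of an unexpected reply.

```python
# Each node holds the bot's message and the replies that lead elsewhere
FLOW = {
    "start": {
        "message": "Hi! Want an activity idea to help your plant grow?",
        "options": {"yes": "pick_person", "no": "goodbye"},
    },
    "pick_person": {
        "message": "Who would you like to reach out to: friend or family?",
        "options": {"friend": "suggest", "family": "suggest"},
    },
    "suggest": {
        "message": "Try sending them a photo that reminds you of them.",
        "options": {},
    },
    "goodbye": {
        "message": "No problem. I'm here whenever you need an idea.",
        "options": {},
    },
}


def next_node(current, user_reply):
    """Follow the arrow for this reply; stay on the same node if unrecognised."""
    options = FLOW[current]["options"]
    return options.get(user_reply.strip().lower(), current)


def run(replies, start="start"):
    """Walk the flow with a list of user replies and collect the bot's messages."""
    node = start
    transcript = [FLOW[node]["message"]]
    for reply in replies:
        node = next_node(node, reply)
        transcript.append(FLOW[node]["message"])
    return transcript


for line in run(["Yes", "friend"]):
    print(line)
```

Staying on the same node when the reply is not recognised is one simple way to handle an edge case; a real flow would more likely show a rephrased question or a fallback message.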

A short demo of the resulting WhatsApp bot:

In conclusion, gathering requirements, prompt engineering, and customizing raw AI models are some major steps involved in designing conversations and giving personality to AI-powered chatbots, and hopefully, this article is a helpful guide to understanding how to get started.

I’m currently open to UX, content, and conversational design roles and excited to work on cool projects.

You can send me an email at valerie.dehindejoseph@gmail.com and also check out my website at valeriedjoseph.com.